In the ever-evolving world of cybersecurity, learning from past mistakes is not just a necessity—it’s a strategic advantage. Today, I’m thrilled to sit down with Simon Glairy, a renowned expert in insurance and Insurtech, who brings a unique perspective to risk management and AI-driven risk assessment. With years of experience in navigating complex security challenges, Simon has helped countless organizations turn vulnerabilities into strengths. In our conversation, we’ll explore the hidden value in security incidents, uncover the common pitfalls that lead to breaches, and dive into practical strategies for building cyber resilience. From human error to cutting-edge tools like Endpoint Detection and Response, Simon shares insights that every business leader needs to hear.

How can a security incident, despite the immediate chaos it causes, become a turning point for strengthening a business in the long term?

Well, Abigail, a security incident is often a wake-up call. It exposes weaknesses that might have gone unnoticed for years. While the initial focus is on containment and recovery, the real value lies in the lessons learned. By thoroughly investigating what went wrong, businesses can pinpoint gaps in their processes, technology, or even culture. This isn’t just about fixing a problem—it’s about building a roadmap for resilience. I’ve seen companies emerge stronger after a breach because they used the incident to overhaul outdated systems, retrain staff, and implement stricter policies. It’s like rebuilding a house after a storm; you don’t just patch it up, you fortify it against future disasters.

What role does human error play in security breaches, and how often do you see seemingly small mistakes lead to big problems?

Human error is one of the leading causes of breaches, and it’s often the simplest oversights that create the biggest headaches. I’m talking about things like misconfigured permissions on an app, forgetting to enable a firewall, or even leaving a password on a sticky note at a desk. These might sound trivial, but they’re open invitations to attackers. In my experience, I’d say a good 60-70% of incidents I’ve analyzed had some element of human error at the root. It’s not about blame—it’s about recognizing that people are often the first line of defense, and without proper training or awareness, they can unintentionally become the weakest link.

Can you explain how weak entry points in a system create opportunities for attackers, and share an example of a common oversight that opens the door to a breach?

Weak entry points are like unlocked windows in a house—if they’re not secured, anyone can slip in. In a digital context, these are often areas where authentication isn’t robust, like a lack of multi-factor authentication (MFA) on critical applications. Without MFA, a single stolen password can grant an attacker access to an entire network. A common oversight I’ve seen is employees reusing passwords across personal and work accounts. If their personal account gets compromised in a data leak, attackers can try those same credentials on corporate systems. It’s a simple mistake, but it can cascade into a full-scale breach if entry points aren’t locked down.

Why is outdated software such a persistent vulnerability for businesses, and how frequently does it contribute to security incidents in your experience?

Outdated software is a goldmine for attackers because it often contains known vulnerabilities that haven’t been patched. Hackers actively scan for unpatched systems, knowing they can exploit flaws that developers have already fixed in newer versions. It’s like leaving your front door wide open with a sign saying, “Come on in.” In my work, I’d estimate that outdated software plays a role in about 40% of the incidents I’ve investigated. The challenge for businesses is often resource constraints, since updating systems takes time and money, but the cost of a breach far outweighs the investment in staying current.

How do phishing schemes manipulate employees into compromising security, and why are they such an effective tactic for attackers?

Phishing schemes are all about deception. They prey on human psychology, often using urgency or fear to trick employees into clicking malicious links or sharing credentials. For example, an email might look like it’s from the IT department, warning that your account will be locked unless you “verify” your password right away. Before you know it, you’ve handed over access to an attacker. They’re effective because they don’t rely on breaking through technical defenses; they exploit trust and distraction. In today’s fast-paced work environments, it’s easy to miss the red flags, which is why phishing remains a top entry point for attackers.

When investigating a major security incident, why is constructing a detailed timeline of events so critical to understanding what happened?

A timeline is the backbone of any incident investigation. It helps you map out when the breach was detected, how it started, and how long it went unnoticed. This isn’t just about documenting facts—it’s about identifying patterns and missed opportunities for intervention. For instance, if you see that suspicious activity was logged days before anyone acted, you know there’s a gap in monitoring or response protocols. Without a clear timeline, you’re piecing together a puzzle in the dark. I’ve found that this step often reveals systemic issues that go beyond the incident itself.
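
To make the timeline idea concrete, here is a minimal Python sketch. It assumes events have already been collected from logs and tickets; the event list, the `kind` labels, and the helper names `build_timeline` and `gap_between` are all hypothetical and exist only for illustration.

```python
from datetime import datetime

# Hypothetical incident events; timestamps and notes are illustrative only.
events = [
    ("2024-03-04T09:15:00", "response", "Analyst opens incident ticket"),
    ("2024-03-01T02:10:00", "intrusion", "Login from unfamiliar IP address"),
    ("2024-03-01T02:12:00", "alert", "Suspicious activity written to log"),
    ("2024-03-04T10:00:00", "containment", "Affected host isolated"),
]

def build_timeline(raw_events):
    """Parse ISO timestamps and return the events in chronological order."""
    parsed = [(datetime.fromisoformat(ts), kind, note) for ts, kind, note in raw_events]
    return sorted(parsed, key=lambda event: event[0])

def gap_between(timeline, first_kind, second_kind):
    """Return the time elapsed between the first event of each kind."""
    first = next(ts for ts, kind, _ in timeline if kind == first_kind)
    second = next(ts for ts, kind, _ in timeline if kind == second_kind)
    return second - first

timeline = build_timeline(events)
alert_to_response = gap_between(timeline, "alert", "response")

for ts, kind, note in timeline:
    print(ts.isoformat(), kind, note)
print("Time from first alert to first response:", alert_to_response)
```

In this made-up data the alert was logged days before anyone acted, which is the kind of gap in monitoring or response that a timeline is meant to surface.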

What’s your approach to assessing the damage after a breach, and which areas do you prioritize during this process?

Assessing damage is about getting a full picture of the impact, both immediate and long-term. I start by identifying what was compromised, whether it’s sensitive data, critical systems, or disrupted operations. Then, I look at financial losses, like downtime costs or potential fines. But I also consider less tangible impacts, like reputational harm or loss of customer trust. Prioritizing data loss is key because it often dictates legal and compliance obligations. I’ve worked with businesses where a breach exposed customer information, and the fallout wasn’t just monetary; it eroded years of goodwill. So, you have to weigh every angle to understand the true cost.

Uncovering the root cause of an attack can be complex. Can you walk me through the steps you take to get to the bottom of the issue?

Finding the root cause is like detective work. I start by analyzing logs and system activity to trace the attacker’s path. Was it a phishing email that led to malware? Or a vulnerability in a third-party tool? I also interview staff to understand any unusual activity they might have noticed. Often, the root cause isn’t a single event but a chain of failures—like weak passwords combined with a lack of monitoring. The goal is to dig beyond surface-level symptoms to find systemic issues. I’ve had cases where the root cause was buried in a misconfigured server that hadn’t been touched in years. It takes patience, but it’s the only way to prevent recurrence.

Why is it important to investigate the role of third-party vendors in a security breach, and how do you approach that part of the analysis?

Third-party vendors are often the blind spot in a company’s security posture. If a vendor’s system is compromised, it can become a gateway to your own network, especially if they have direct access or shared credentials. I always check whether a breach originated from a vendor’s end by reviewing access logs and communication records. I also ask pointed questions about their security practices—do they use MFA? Are their systems patched? I’ve seen incidents where a small vendor with lax security became the entry point for a major attack. It’s a reminder that your security is only as strong as the weakest link in your supply chain.

What’s your process for documenting findings after an incident, and why is evaluating the effectiveness of your response so essential?

Documenting findings is about creating a clear record of what happened, why it happened, and how you responded. I compile detailed reports with timelines, evidence, and analysis of compromised systems. This isn’t just for internal use—it’s often required for legal or regulatory purposes. Evaluating the response is just as important because it shows where your incident handling succeeded or fell short. Did alerts go unnoticed? Was communication slow? I’ve found that post-incident reviews often reveal gaps in training or tooling that can be addressed before the next crisis. It’s about turning hindsight into foresight.

Looking at ways to boost cyber resilience, why is keeping software and systems updated such a fundamental step in preventing future attacks?

Keeping software updated is non-negotiable because updates often include patches for known vulnerabilities. Attackers are quick to exploit outdated systems, knowing that many businesses lag behind on updates. I’ve seen cases where a simple patch could have prevented a multi-million-dollar breach. Updates aren’t just about security—they also improve performance and compatibility. The key is to make patching a routine part of operations, not an afterthought. It’s one of the easiest ways to close doors that attackers are already knocking on.
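
One way to make patching routine is to compare what is installed against the minimum patched version on a schedule. The Python sketch below assumes plain dotted numeric version strings; the inventory, the version numbers, and the helper names are hypothetical, not taken from any real product.

```python
def parse_version(text):
    """Turn a dotted version such as '2.4.10' into a comparable tuple (2, 4, 10)."""
    return tuple(int(part) for part in text.split("."))

# Hypothetical software inventory and minimum patched versions.
installed = {"web-server": "2.4.1", "vpn-client": "5.0.3", "mail-gateway": "1.9.12"}
minimum_patched = {"web-server": "2.4.10", "vpn-client": "5.0.3", "mail-gateway": "1.10.0"}

def find_outdated(installed_versions, required_versions):
    """Return the names of packages running below their minimum patched version."""
    return sorted(
        name
        for name, version in installed_versions.items()
        if name in required_versions
        and parse_version(version) < parse_version(required_versions[name])
    )

outdated = find_outdated(installed, minimum_patched)
print("Needs patching:", outdated)
```

Versions are compared as tuples of integers rather than as strings, because a string comparison would wrongly rank "1.9.12" above "1.10.0".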

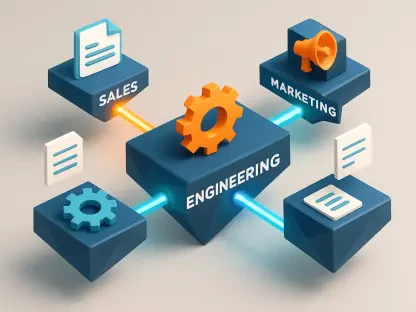

Can you explain the concept of network segmentation and how it helps minimize damage during a security breach?

Network segmentation is about dividing your network into smaller, isolated sections. Think of it as building walls inside a ship—if one compartment floods, the others stay dry. In a breach, segmentation limits how far an attacker can spread. For example, if they gain access to a marketing server, they can’t easily jump to financial systems if those are on a separate segment. I’ve worked with companies that avoided catastrophic damage because segmentation contained the attack to a single department. It’s not foolproof, but it buys critical time to respond.
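
The marketing and finance example can be sketched as a default-deny policy check. This is a simplified illustration using Python's standard `ipaddress` module; the address ranges, the segment names, and the `ALLOWED_FLOWS` table are assumptions, and real segmentation is enforced by firewalls, VLANs, or similar controls rather than by application code.

```python
import ipaddress

# Hypothetical segments; the address ranges are illustrative only.
SEGMENTS = {
    "marketing": ipaddress.ip_network("10.0.10.0/24"),
    "finance": ipaddress.ip_network("10.0.20.0/24"),
}

# Flows that are explicitly permitted; anything not listed is denied.
ALLOWED_FLOWS = {("marketing", "marketing"), ("finance", "finance")}

def segment_of(address):
    """Return the name of the segment containing the address, or None."""
    ip = ipaddress.ip_address(address)
    for name, network in SEGMENTS.items():
        if ip in network:
            return name
    return None

def is_allowed(source, destination):
    """Default deny: traffic passes only if its segment pair is listed."""
    src_segment = segment_of(source)
    dst_segment = segment_of(destination)
    if src_segment is None or dst_segment is None:
        return False
    return (src_segment, dst_segment) in ALLOWED_FLOWS

print(is_allowed("10.0.10.5", "10.0.20.8"))  # marketing host reaching finance
print(is_allowed("10.0.20.5", "10.0.20.8"))  # traffic inside finance
```

Under this policy a compromised marketing server cannot open a connection to a finance host, while traffic inside each segment still flows.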

How does consistent monitoring of business networks help in detecting threats early, and what tools do you find most effective for this?

Consistent monitoring is like having a security camera running 24/7: it catches suspicious activity before it turns into a full-blown crisis. By tracking network logs and user behavior, you can spot anomalies, like unusual login attempts or data transfers. I often recommend tools like Security Information and Event Management (SIEM) systems, which aggregate and analyze data in real time to flag potential threats. I’ve seen monitoring save the day when it detected ransomware encrypting files in the early stages, allowing the team to isolate the affected system. Early detection is everything in minimizing damage.
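
As a rough illustration of spotting unusual login attempts, the Python sketch below counts failed logins per user and flags accounts that cross a threshold. The event list, the threshold of five, and the function name are hypothetical; a SIEM applies far richer correlation than this.

```python
from collections import Counter

# Hypothetical authentication log entries as (user, outcome) pairs.
login_events = [
    ("alice", "success"),
    ("bob", "failure"),
    ("bob", "failure"),
    ("bob", "failure"),
    ("carol", "failure"),
    ("bob", "failure"),
    ("bob", "failure"),
    ("alice", "success"),
]

FAILURE_THRESHOLD = 5  # assumed cutoff; tune to the environment

def flag_repeated_failures(events, threshold=FAILURE_THRESHOLD):
    """Return users whose failed login count meets or exceeds the threshold."""
    failures = Counter(user for user, outcome in events if outcome == "failure")
    return sorted(user for user, count in failures.items() if count >= threshold)

flagged_users = flag_repeated_failures(login_events)
print("Accounts to review:", flagged_users)
```

A single failed login, like the one for `carol`, stays below the threshold, so only the repeated pattern is raised for review.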

Why is monitoring particularly crucial for businesses that must adhere to regulatory compliance standards?

Monitoring is a cornerstone of regulatory compliance because most standards—like GDPR or HIPAA—require businesses to actively protect sensitive data. Regulators want to see that you’re not just reacting to breaches but proactively watching for threats. Without monitoring, you can’t prove due diligence, which can lead to hefty fines or legal action post-incident. I’ve advised clients in regulated industries where monitoring wasn’t just a best practice—it was a legal necessity. It’s about accountability as much as security, showing that you’re doing everything possible to safeguard information.

What’s the significance of Endpoint Detection and Response (EDR) solutions in securing a network, especially with the rise of remote work?

EDR solutions are critical in today’s environment, where employees connect from laptops and mobile devices across various locations. They monitor endpoints—think individual devices—for suspicious activity, like malware or unauthorized access, regardless of where the device is. With remote work, you can’t rely on a traditional perimeter defense; endpoints are the new battleground. EDR provides visibility into these scattered connections and can automatically isolate a compromised device. I’ve seen EDR tools catch threats on a remote worker’s laptop that could have spread to the corporate network if left unchecked. It’s a game-changer for distributed teams.

How do EDR tools actively hunt for threats, and can you share an example of how they’ve made a difference in a real-world scenario?

EDR tools don’t just wait for threats; they actively search for them by analyzing patterns and behaviors across endpoints. They use algorithms to detect anomalies, like a device suddenly downloading large files at odd hours, and can correlate that with known threat indicators. In one case I worked on, an EDR tool flagged a seemingly harmless process on an employee’s device as potential malware. It turned out to be a sophisticated trojan that hadn’t yet caused any damage. The tool isolated the device and allowed us to neutralize the threat before it spread. That proactive hunting capability is what sets EDR apart.
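
The "large files at odd hours" rule can be sketched in a few lines of Python. This is a toy heuristic, not how any particular EDR product works; the business hours, the size threshold, the device names, and the telemetry records are all assumptions made for the example.

```python
from datetime import datetime

BUSINESS_HOURS = range(8, 19)            # assumed working day, 08:00 to 18:59
LARGE_TRANSFER_BYTES = 500 * 1024 ** 2   # assumed threshold of 500 MiB

def is_suspicious(timestamp, bytes_transferred):
    """Flag a transfer that is both large and outside business hours."""
    hour = datetime.fromisoformat(timestamp).hour
    return hour not in BUSINESS_HOURS and bytes_transferred >= LARGE_TRANSFER_BYTES

# Hypothetical endpoint telemetry as (device, timestamp, bytes downloaded).
telemetry = [
    ("laptop-17", "2024-05-02T03:20:00", 2 * 1024 ** 3),
    ("laptop-17", "2024-05-02T14:05:00", 2 * 1024 ** 3),
    ("laptop-22", "2024-05-02T02:45:00", 10 * 1024 ** 2),
]

flagged_devices = [device for device, ts, size in telemetry if is_suspicious(ts, size)]
print("Devices to isolate and inspect:", flagged_devices)
```

Only the transfer that is both large and off-hours is flagged. In practice a result like this would then be correlated with known threat indicators before a device is isolated.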

What’s your forecast for the future of cybersecurity resilience, especially as threats continue to evolve?

I believe the future of cybersecurity resilience lies in automation and integration. As threats become more sophisticated, manual responses won’t keep up. We’ll see greater adoption of AI-driven tools that predict and prevent attacks before they happen, not just react to them. Integration between systems—like EDR, SIEM, and cloud security—will be key to creating a seamless defense. But the human element will remain critical; no tool can replace a well-trained team. My forecast is that businesses investing in both technology and people will be the ones best equipped to handle whatever comes next. It’s an exciting, if challenging, road ahead.