With a deep background in InsurTech and a keen focus on the intersection of artificial intelligence and risk management, Simon Glairy has become a leading voice in navigating the complex world of modern insurance modeling. Today, we delve into one of the industry’s most pressing challenges: the balancing act between the demand for highly accurate predictive models and the non-negotiable requirement for regulatory transparency. We’ll explore how traditional modeling techniques are buckling under the pressure of complex data, the promise of automated tools that enhance rather than replace established methods, and what this evolution means for the future roles of actuaries and data scientists.

Modeling teams in financial services face a persistent tension between the need for predictive accuracy and regulatory demands for transparency. How does this challenge specifically impact pricing and risk functions, and what are the practical limitations of classic GLMs that teams must manually work around?

It’s a tension you can feel in almost every modeling team meeting. On one side, you have business leaders pushing for more predictive power to stay competitive, asking us to wring every last drop of insight from our growing datasets. On the other, you have the compliance and regulatory teams reminding us, quite rightly, that every single decision, especially in pricing and risk, must be transparent and defensible. The classic Generalized Linear Models, or GLMs, have been the industry standard for decades precisely because they satisfy the regulators; they are trusted and their outputs are clear. But their simplicity is also their weakness. The real world is messy and non-linear. A classic GLM tends to flatten these complexities, often missing crucial interactions between variables. To compensate, actuaries spend an enormous amount of time on manual workarounds: painstakingly binning variables, designing spline structures, and using their own experience to guess where important interactions might lie. It’s an inefficient, often subjective process that forces a frustrating compromise between a model’s performance and its explainability.

Enhancing traditional GLMs often involves manual processes like binning variables and hypothesizing interactions. Could you describe this time-consuming workflow and explain how subjective judgments made during this process can create inconsistencies across different teams or models?

It’s a bit like being a sculptor with a very limited set of tools. An actuary will sit down with the data and, based on intuition and experience, start making decisions. They might say, “I believe drivers aged 20-25 behave differently from those aged 26-30,” so they manually create those bins. Then they might hypothesize that the effect of a driver’s location changes based on the type of vehicle they own. Each one of these hypotheses has to be individually built and tested. It’s an incredibly labor-intensive cycle of guess, build, and check. The subjectivity is the real issue. An experienced actuary in your Paris office might group variables differently than a colleague in Brussels, not because one is right and the other is wrong, but because their experiences and judgments differ. This makes it incredibly difficult to standardize your modeling approach across the organization, leading to inconsistencies and a process that is far from scalable. You end up with models that are as much a reflection of the modeler’s personal choices as they are of the underlying data.
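
To make that subjectivity concrete, here is a minimal Python sketch. The toy policies, the age bands, and the helper name `frequency_by_band` are all illustrative assumptions rather than anything from a real portfolio; the point is only that two hand-picked banding schemes summarize the same data differently.

```python
from bisect import bisect_right

# Hypothetical toy data: (driver_age, claim_count, exposure_years).
policies = [
    (20, 1, 1.0), (22, 0, 1.0), (24, 1, 1.0), (25, 0, 1.0),
    (26, 1, 1.0), (27, 0, 1.0), (29, 0, 1.0), (30, 0, 1.0),
]

def frequency_by_band(rows, edges):
    """Claim frequency per age band.

    `edges` holds the inclusive lower bound of each band, in ascending
    order. Every age is assumed to be >= edges[0].
    """
    claims = [0.0] * len(edges)
    exposure = [0.0] * len(edges)
    for age, n_claims, expo in rows:
        band = bisect_right(edges, age) - 1  # index of the last edge <= age
        claims[band] += n_claims
        exposure[band] += expo
    return [c / e if e else None for c, e in zip(claims, exposure)]

# Two modelers, same data, different judgment calls on where to cut.
paris = frequency_by_band(policies, [20, 26])     # bands 20-25 and 26-30
brussels = frequency_by_band(policies, [20, 24])  # bands 20-23 and 24-30
```

On this toy data the first scheme gives frequencies of 0.5 and 0.25, while the second gives 0.5 and roughly 0.33, so the "older driver" factor entering the GLM already depends on who drew the bands.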

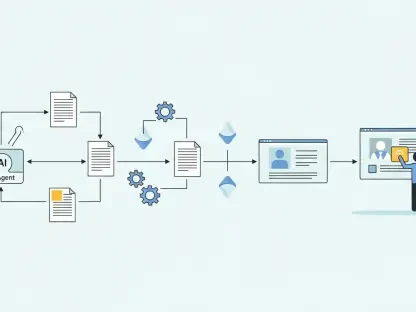

Automated GLM tools are designed to capture non-linear effects and variable interactions without manual intervention. Could you walk us through the technical process of how a model automates this feature engineering, and what are the biggest performance gains you typically see as a result?

This is where the real leap forward happens. Instead of relying on manual guesswork, an automated GLM platform like the one from Earnix systematically tackles the problem. For non-linear effects, it doesn’t start with broad, assumed bins. Instead, it begins with very granular groupings of a variable and then uses the data itself to decide where to merge them. The algorithm finds the natural break points, which is a much more objective and powerful approach. For interactions, it intelligently searches for combinations of variables that have a high predictive impact, preventing the model from becoming overly complex while still capturing those critical relationships. It even applies “smart grouping” to variables with many categories, like car models, clustering similar ones together to create a more stable and interpretable result. The performance lift we see is often staggering. In benchmark tests on French and Belgian motor liability data, these automated GLMs delivered accuracy that was remarkably close to complex “black box” techniques like gradient-boosting, while consistently blowing past other traditional interpretable methods. You’re capturing the lion’s share of the performance gains of advanced machine learning, but without stepping outside a governed framework.
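
The "start granular, then merge" idea can be sketched in a few lines of Python. The interview does not describe the vendor's actual algorithm, so this is a simple greedy stand-in under stated assumptions: the function name `merge_adjacent_bins`, the `tolerance` parameter, and the toy figures are all hypothetical, and a production tool would presumably use a statistical criterion rather than a fixed frequency gap.

```python
def merge_adjacent_bins(bins, tolerance):
    """Greedily merge neighbouring bins whose claim frequencies are close.

    `bins` is an ordered list of (low, high, claims, exposure) tuples.
    `tolerance` is the largest frequency gap still treated as "the same".
    """
    bins = list(bins)
    while len(bins) > 1:
        freqs = [claims / expo for _, _, claims, expo in bins]
        gaps = [abs(freqs[i + 1] - freqs[i]) for i in range(len(bins) - 1)]
        i = min(range(len(gaps)), key=gaps.__getitem__)  # closest neighbours
        if gaps[i] > tolerance:
            break  # every remaining boundary is a real break point
        low, _, c1, e1 = bins[i]
        _, high, c2, e2 = bins[i + 1]
        bins[i:i + 2] = [(low, high, c1 + c2, e1 + e2)]
    return bins

# Hypothetical granular age bins: (low, high, claims, exposure_years).
granular = [
    (18, 19, 30, 100), (20, 21, 28, 100),
    (22, 23, 20, 100), (24, 25, 19, 100),
    (26, 27, 10, 100), (28, 29, 11, 100),
]

merged = merge_adjacent_bins(granular, tolerance=0.03)
```

With these toy numbers the six two-year bins collapse into three bands (18-21, 22-25, 26-29), and the break points come from the data rather than from a modeler's prior belief about where age effects change.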

A key advantage of automated GLM approaches is that the output remains a transparent, reviewable model. Can you explain the practical steps a team would take to audit, test, and adjust these outputs before deployment, and why this is crucial for satisfying governance requirements?

This is the most critical point for adoption in a regulated industry. The magic is that after all that sophisticated automation, the final output is still a standard, fully transparent GLM. A modeling team receives a model where they can see every coefficient and every variable, just as they always have. The audit process is therefore familiar. The first step is to review the coefficients to ensure they make business sense—for example, does risk increase with a certain behavior as expected? Then, they can stress-test the model against different scenarios. Crucially, they retain the ability to manually adjust coefficients if needed for business or regulatory reasons. Because the output fits directly into existing infrastructures like rating tables and pricing workflows, deployment is seamless. You can literally print out the model’s structure and hand it to an auditor or regulator. It’s this ability to maintain complete human oversight and fit into established governance processes that makes it a pragmatic innovation rather than a disruptive one.
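
As a rough illustration of that review step, the Python sketch below converts log-link GLM coefficients into multiplicative rating factors, runs one business-sense check, and applies a manual override. The coefficient values, the variable names, and the cap of 1.5 are hypothetical and chosen only for the example; it assumes a log link, which is common for frequency models but is not stated in the interview.

```python
import math

# Hypothetical fitted coefficients of a log-link GLM (illustrative values).
coefficients = {
    "intercept": -2.0,
    "age_18_21": 0.60,
    "age_22_25": 0.25,
    "age_26_plus": 0.0,  # base level
}

def to_relativities(coefs):
    """Turn log-link coefficients into multiplicative rating factors."""
    return {
        name: math.exp(beta)
        for name, beta in coefs.items()
        if name != "intercept"
    }

def is_non_increasing(values):
    """True if each value is at least as large as the one after it."""
    return all(a >= b for a, b in zip(values, values[1:]))

relativities = to_relativities(coefficients)

# Business-sense check (an assumed expectation): risk falls with age band.
age_order = ["age_18_21", "age_22_25", "age_26_plus"]
sanity_ok = is_non_increasing([relativities[k] for k in age_order])

# Manual override: cap the youngest band's factor at 1.5, standing in for
# a business or regulatory adjustment made by the reviewing team.
adjusted = dict(relativities)
adjusted["age_18_21"] = min(adjusted["age_18_21"], 1.5)
```

The resulting dictionary is effectively one column of a rating table, which is why the output can be read, challenged, and changed by a reviewer without any special tooling.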

Benchmark results suggest automated GLMs can achieve performance close to that of complex machine learning techniques like gradient-boosting. For insurers, what are the operational and competitive implications of achieving this significant performance lift while remaining within a familiar, regulator-friendly modeling framework?

The implications are massive. Operationally, you’re collapsing a modeling process that took weeks of manual labor into a much shorter, more efficient cycle. This frees up your highly skilled actuaries and data scientists to focus on strategic analysis rather than tedious feature engineering. Competitively, it’s a game-changer. An insurer that can more accurately price risk can offer better rates to lower-risk customers, attracting a more profitable portfolio while avoiding adverse selection. Achieving that accuracy lift—getting so close to the performance of gradient-boosting—without having to fight the “black box” battle with regulators is the holy grail. It means you can modernize your core pricing and risk models now, without abandoning the trust you’ve built with supervisors. This approach effectively dissolves the long-standing trade-off between accuracy and interpretability, giving insurers a clear, pragmatic path to embedding more sophisticated data science into their most critical functions.

What is your forecast for how automated and hybrid modeling approaches will reshape the roles of actuaries and data scientists in the insurance industry over the next five years?

I believe we’re on the cusp of a significant evolution, not a replacement. These automated and hybrid tools will elevate the roles of both actuaries and data scientists. For actuaries, the drudgery of manual feature engineering will fade, allowing them to shift their focus toward higher-level strategic thinking. They will become the arbiters of model fairness, the interpreters of complex model outputs for business stakeholders, and the guardians of regulatory compliance in an increasingly automated world. Their deep domain expertise will be more valuable than ever. Data scientists, for their part, will be freed from the constraints of having to “dumb down” their models for regulatory review. They can focus on integrating new data sources, experimenting with sophisticated techniques within these new hybrid frameworks, and pushing the boundaries of what’s possible, knowing the final output will remain interpretable. Essentially, we’ll see a convergence where actuaries become more data-savvy strategists and data scientists become more fluent in the language of business risk and regulation. The technology will handle the heavy lifting, allowing the human experts to do what they do best: think critically and make sound judgments.