Insuring a technology engineered for superior precision against its own potential for error presents a compelling paradox, yet this very concept is rapidly materializing into a critical financial product for high-stakes industries. As organizations increasingly delegate core decision-making to algorithms, particularly in sectors like finance and lending, the significance of “AI model risk” has escalated from a theoretical concern to a tangible liability. The reliance on these automated systems introduces a new category of operational risk that demands a sophisticated management solution. This analysis will dissect the emerging market for AI model risk insurance, examine its real-world applications through a functioning ecosystem, analyze the viewpoints of industry experts pioneering this space, and project the future trajectory of this innovative frontier in risk management.

The Emergence of a Specialized Insurance Market

Defining the Scope and Structure of Coverage

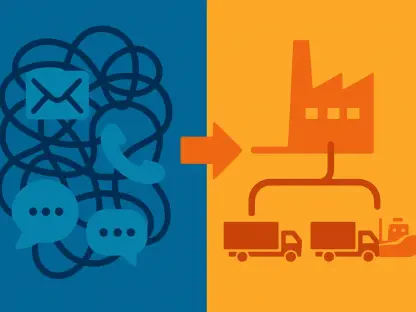

A significant trend is taking shape in the form of specialized insurance products designed specifically to cover financial losses originating from AI model failures. This nascent market is not offering a broad safety net but a highly targeted one. The core of these policies is the concept of covering “excess errors,” a framework that meticulously isolates the pure technology-related risk. It distinguishes between losses caused by an AI model’s underperformance and those stemming from conventional market volatility, macroeconomic shifts, or standard credit risks, which are explicitly excluded. This precision allows insurers to underwrite a specific, identifiable peril: the failure of the algorithm itself.

This innovative approach to coverage is often built on a “parametric-style” design, a structure that streamlines the claims process dramatically. Instead of a lengthy and complex indemnity investigation to prove damages after an event, a parametric policy is triggered when a pre-defined metric is met. In this context, a payout occurs if the AI model’s performance, such as its predictive accuracy in loan defaults, falls below a contractually agreed-upon threshold. This structure transforms a potentially ambiguous technological failure into a clear, measurable, and insurable event, providing clients with greater certainty and faster resolution.
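The trigger logic described above can be sketched in a few lines of Python. This is a minimal illustration, not the terms of any actual policy: the `ParametricPolicy` fields, the per-point payout schedule, and the cap are all hypothetical assumptions introduced here to show how a measured shortfall against an agreed threshold could translate directly into a payout.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ParametricPolicy:
    """Hypothetical policy terms; none of these fields come from a real contract."""
    accuracy_threshold: float  # contractually agreed minimum accuracy, e.g. 0.95
    payout_per_point: float    # assumed payout per percentage point of shortfall
    payout_cap: float          # assumed maximum payout under the policy


def parametric_payout(policy: ParametricPolicy, correct: int, total: int) -> float:
    """Return the payout triggered by observed accuracy, or 0.0 if the threshold is met."""
    if total <= 0:
        raise ValueError("total must be positive")
    accuracy = correct / total
    # Shortfall in percentage points; zero when the model meets the threshold.
    shortfall_points = max(0.0, policy.accuracy_threshold - accuracy) * 100
    # The payout depends only on the measured metric, never on a loss investigation.
    return min(policy.payout_cap, shortfall_points * policy.payout_per_point)
```

Under these assumed terms, a model that meets the threshold triggers nothing, while any measured shortfall produces a payout that can be computed as soon as the performance data is available, which is what makes the claims process fast.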

The AI Insurance Ecosystem in Action

The U.S. mortgage lending sector provides a compelling case study of this new insurance ecosystem in action, showcasing a symbiotic collaboration between technology providers, financial institutions, and risk underwriters. At the center is MKIII, a fintech firm that provides an AI-powered screening platform to credit unions and banks. This platform automates creditworthiness assessments, with the AI model making the vast majority of decisions. Critically, MKIII bundles AI model risk insurance directly into its service, making risk assurance a core part of its value proposition.

This arrangement creates a powerful incentive for lenders. The primary benefit they gain is “capital relief.” With an insurance policy backstopping potential losses from the AI’s errors, lenders can persuade regulators to let them reduce the amount of capital they must hold in reserve against their loan portfolios. This liberated capital can then be deployed to increase lending volume, stimulating economic activity. This entire system is made possible by third-party validators like Armilla, which assesses the AI model’s reliability for insurers, and the financial backing of global reinsurers such as Munich Re and Greenlight Re, who provide the capacity to underwrite this novel risk.
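A rough arithmetic sketch may help show why capital relief matters to a lender. The function below is purely illustrative: the single flat capital ratio, the parameter names, and the assumption that all freed capital is redeployed into new loans at the lower ratio are simplifications introduced here, not a description of any regulatory formula.

```python
def capital_relief(portfolio: float, ratio_before: float, ratio_after: float) -> dict:
    """Estimate freed capital and the extra lending it could support.

    Assumes one flat capital ratio applies to the whole portfolio, which is a
    deliberate simplification of how reserve requirements actually work.
    """
    if not 0 < ratio_after <= ratio_before < 1:
        raise ValueError("expected 0 < ratio_after <= ratio_before < 1")
    capital_before = portfolio * ratio_before
    capital_after = portfolio * ratio_after
    freed = capital_before - capital_after
    # Each unit of new lending is assumed to need ratio_after units of capital.
    extra_lending = freed / ratio_after
    return {"freed_capital": freed, "extra_lending": extra_lending}
```

With hypothetical inputs of a 100 million portfolio and a ratio that falls from 10% to 8%, this sketch frees 2 million in capital, enough to support roughly 25 million in additional loans at the lower ratio.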

Industry Insights on Pricing Probabilistic Risk

The insurability of artificial intelligence hinges on a fundamental shift in perspective, as articulated by specialists like Michael von Gablenz of Munich Re. The core philosophy is that AI models are inherently probabilistic systems, not deterministic machines. This means a certain rate of error or “hallucination” is not a sign of a complete breakdown but an expected and quantifiable characteristic of the technology. This acceptance is crucial because it reframes AI fallibility from an uninsurable flaw into a manageable risk.

Based on this understanding, the underwriting strategy becomes straightforward. The insurance premium is tied to the AI model’s expected error rate, which is determined through rigorous third-party assessment. A model with a higher probability of mistakes commands a higher premium, turning risk into a priceable commodity. This approach stands in stark contrast to a counter-trend seen elsewhere in the industry, where some insurers are actively seeking to add AI-related exclusions to general liability policies. That caution stems from a fear of “aggregation risk,” where the failure of a single, widely used algorithm could produce many correlated claims at once, creating a cascade of losses that is difficult to predict and manage under traditional frameworks.
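The link between error rate and premium can be illustrated with a simple expected-loss calculation. This is a sketch under stated assumptions rather than any insurer's actual pricing model: the `loss_given_error` severity factor and the `loading` margin for expenses and uncertainty are hypothetical parameters added here for illustration.

```python
def annual_premium(
    expected_error_rate: float,
    insured_exposure: float,
    loss_given_error: float,
    loading: float = 0.30,
) -> float:
    """Price a policy as expected loss plus a proportional loading.

    expected_error_rate: assessed probability that a decision is wrong (0 to 1)
    insured_exposure:    total value of decisions covered by the policy
    loss_given_error:    assumed fraction of exposure lost when an error occurs
    loading:             assumed margin for expenses, capital cost, and uncertainty
    """
    if not 0 <= expected_error_rate <= 1:
        raise ValueError("expected_error_rate must be between 0 and 1")
    expected_loss = expected_error_rate * insured_exposure * loss_given_error
    return expected_loss * (1 + loading)
```

In this simplified form the premium scales linearly with the assessed error rate, so a model whose expected error rate doubles would, all else equal, pay twice as much.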

Future Outlook and Broader Implications

The rise of AI model risk insurance signals a broader trend toward the formalization of technology-related liabilities, a necessary evolution in risk management for the digital age. As algorithms become more deeply integrated into the economy, their potential for failure can no longer be an unmanaged externality. This development presents both opportunities and challenges for the insurance industry. Insurers must now develop entirely new methodologies for product design and risk assessment that are tailored to the unique dynamics of technology performance rather than historical loss data.

A significant challenge on the horizon is managing aggregation risk. The widespread adoption of a single popular AI platform or a flawed software update pushed to thousands of clients could trigger widespread, simultaneous losses, posing a systemic threat that underwriters must learn to model and mitigate. Furthermore, this trend underscores the urgent need for proactive engagement between insurers, technology firms, and regulatory bodies. Establishing clear and consistent frameworks for liability, transparency, and accountability in automated decision-making is essential to foster market confidence and ensure the sustainable growth of both AI innovation and the insurance products designed to support it. This early market activity is a leading indicator of how financial and risk management industries will need to adapt to an economy fundamentally reliant on algorithms.
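A small calculation shows why aggregation risk is hard on underwriters. The sketch below compares two stylized extremes, both assumptions made for illustration: clients whose models fail independently, and clients who all depend on one shared model that fails for everyone at once. Real portfolios would sit somewhere in between.

```python
import math


def failure_count_stats(n_clients: int, p_fail: float, shared_model: bool) -> tuple[float, float]:
    """Return (mean, standard deviation) of the number of failing clients.

    shared_model=False: each client fails independently, so the count is Binomial(n, p).
    shared_model=True:  one failure event hits every client, so the count is n or 0.
    """
    if n_clients <= 0 or not 0 <= p_fail <= 1:
        raise ValueError("need n_clients > 0 and 0 <= p_fail <= 1")
    mean = n_clients * p_fail
    per_client_sd = math.sqrt(p_fail * (1 - p_fail))
    if shared_model:
        sd = n_clients * per_client_sd
    else:
        sd = math.sqrt(n_clients) * per_client_sd
    return mean, sd
```

The expected number of failures is identical in both cases, but the standard deviation in the shared-model case is larger by a factor of the square root of the number of clients. The average loss looks the same on paper while the worst-case loss is far more severe, which is the systemic exposure underwriters need to model.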

A New Frontier in Risk Management

The emerging market shows that a viable, functioning structure for AI model risk insurance has been established. This new class of insurance provides tangible economic benefits, most notably capital relief for financial institutions, which in turn enables increased lending. It also confirms that even the complex, probabilistic risks inherent in artificial intelligence can be quantified, priced, and transferred.

This formalization of AI risk management stands as a critical tool for organizations navigating the operational challenges of adopting advanced technologies in high-stakes industries. The collaborative ecosystem of fintech providers, independent assessors, and global reinsurers demonstrates a clear pathway for managing these new liabilities. As AI systems become more powerful and pervasive, this form of specialized insurance, still a niche product today, is poised to evolve into a foundational pillar of modern corporate governance and a key enabler of responsible technological innovation.